Watching AI Talk to AI Is Fascinating, Scary, and Observable

AI-to-AI conversations, decoded and on display. No black boxes. No guesswork.

In our recent article on Extreme AI Observability, we emphasized the need for transparency into how AI reasons and reaches its outcomes, an essential foundation for trusting Agentic AI. There is another layer to that observability, arguably an even more compelling one: watching AI agents communicate with each other in real time.

This isn’t science fiction. It’s real, operational, and happening at Charli today.

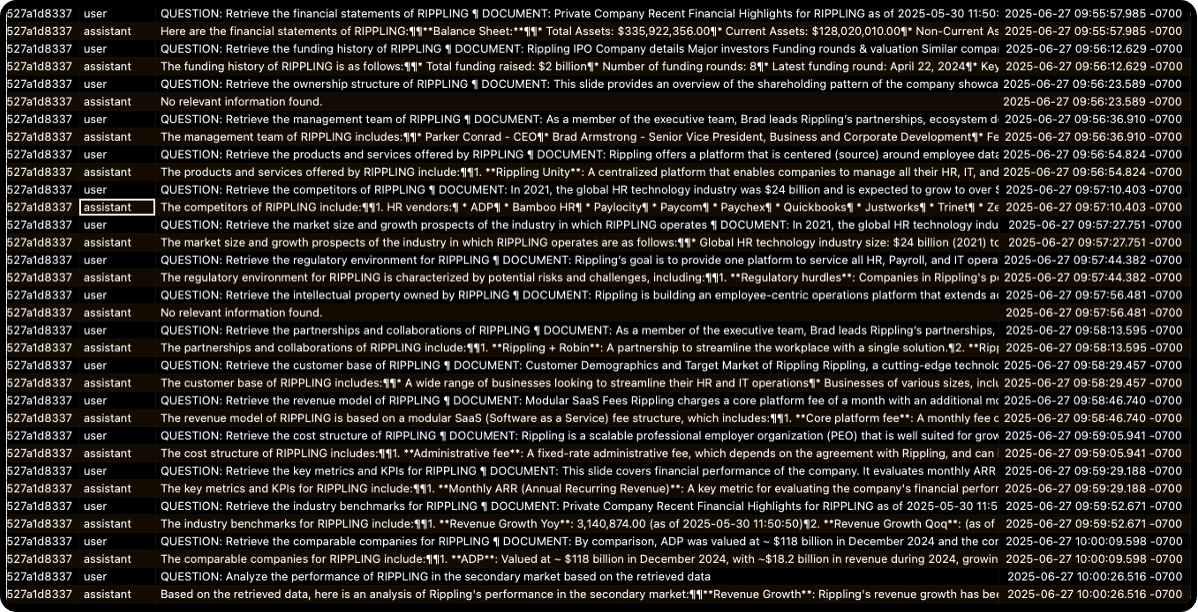

Following the publication of the Extreme AI Observability article, our AI Labs science team demonstrated what they call Agent-to-Agent Interrogation: an auditable sequence of reasoning between specialized AI agents, each with a defined role, that engage in a purposeful exchange of questions, answers, and structured data retrieval.

Unlike early AI experiments that devolved into incoherent, self-generated dialects, this system is focused, bounded, and directed. Every exchange is task-oriented, interpretable, and, most importantly, human-understandable. You can literally trace the logic chain from one agent to the next. This is a significant step forward for Agentic AI, because the guardrails needed for safety, governance, and compliance are built into the design rather than added afterward.

Why It Matters: AI That Reasons in Public

To truly augment human intelligence, not just automate tasks, Agentic AI must be able to explain itself. In a multi-agent system, that explanation goes well beyond the Chain-of-Thought prompting used in standard generative AI. Communication between agents must be:

Transparent across agent flows and processing paths

Deterministic enough to trace reasoning steps with consistency

Contextually coherent across multi-step, multi-agent interactions

Fallback-aware when inputs are missing or incomplete

In Charli’s architecture, when an agent lacks the data it needs to continue reasoning, that breakdown isn’t hidden; it’s observable. The Coordinating Agent identifies the information gap and dynamically activates specialized Task Agents to retrieve the missing context. That context may come from knowledge ontologies (dynamic in Charli’s case), enterprise systems, third-party APIs, or external knowledge sources.

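This is not your typical RAG (Retrieval-Augmented Generation) setup. In a typical RAG pipeline, retrieval happens once, before generation; here, retrieval is triggered during reasoning, whenever the Coordinating Agent detects a gap. It’s a precision-tuned, multi-agent reasoning protocol designed for real-time orchestration, verifiability, and robust enterprise integration.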
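To make the gap-detection flow concrete, here is a minimal sketch in Python. Everything in it is hypothetical and is not Charli’s implementation: the `CoordinatingAgent` and `Message` classes, the `resolve` method, the field names, and the lambda standing in for an enterprise system or third-party API are all illustrative assumptions. It shows two properties the text emphasizes: a missing input triggers a Task Agent dispatch, and every step, including a gap no agent can fill, is written to an inspectable trace.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# Hypothetical record of one agent-to-agent message in the observable trace.
@dataclass
class Message:
    sender: str
    recipient: str
    kind: str  # "gap", "question", "answer", or "unresolved"
    content: str

# Hypothetical Task Agent: a stateless function that retrieves one missing field.
TaskAgent = Callable[[str], str]

@dataclass
class CoordinatingAgent:
    task_agents: dict[str, TaskAgent]  # field name -> agent able to retrieve it
    trace: list[Message] = field(default_factory=list)

    def _log(self, sender: str, recipient: str, kind: str, content: str) -> None:
        self.trace.append(Message(sender, recipient, kind, content))

    def resolve(self, required: list[str], context: dict[str, str]) -> dict[str, str]:
        """Fill each required field, dispatching a Task Agent for every gap found."""
        context = dict(context)  # work on a copy; the caller's dict is untouched
        for key in required:
            if key in context:
                continue
            # The gap is written to the trace instead of being silently skipped.
            self._log("coordinator", "coordinator", "gap", f"missing '{key}'")
            agent = self.task_agents.get(key)
            if agent is None:
                # Fallback-aware: no agent can supply the field, so record that too.
                self._log("coordinator", "coordinator", "unresolved", f"no Task Agent for '{key}'")
                continue
            self._log("coordinator", f"task:{key}", "question", f"retrieve '{key}'")
            value = agent(key)
            self._log(f"task:{key}", "coordinator", "answer", value)
            context[key] = value
        return context

# Usage: one field is already known, the other must be retrieved.
coordinator = CoordinatingAgent(
    task_agents={"revenue": lambda key: "illustrative value from an enterprise system"}
)
result = coordinator.resolve(["company", "revenue"], {"company": "ExampleCo"})
for m in coordinator.trace:
    print(f"{m.sender} -> {m.recipient} [{m.kind}]: {m.content}")
```

Keeping the trace as plain data, rather than free-form log text, is one way to make each reasoning step auditable after the fact: the sequence of gaps, questions, and answers can be filtered or replayed directly.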

Inside the Charli Agent Ecosystem

Our Agentic AI architecture is composed of distinct, transient agents, each with a scoped responsibility:

Coordinating Agents – Orchestrate the reasoning flow and manage dependencies

Task Agents – Retrieve and normalize data from diverse systems

Analysis Agents – Perform comparisons, detect patterns, validate hypotheses

Computation Agents – Execute quantitative models and derive outputs

These agents are short-lived, stateless, and composable. There are no claims of human-level AI here, just purpose-built, API-driven components that spawn, execute, and dissolve as needed. Need another layer of reasoning? Spawn a new agent. Need different data? Reconfigure the task parameters. It’s elastic, scalable intelligence on demand.
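The transient, stateless pattern can be sketched the same way. In this hypothetical Python example, the role names mirror the list above, but the agent functions, the `REGISTRY` dict, `run_plan`, and the sample figures are illustrative assumptions, not Charli’s API. Each agent is a pure function that keeps no state between calls, so "spawning" one amounts to invoking it for a single step of a plan and discarding it afterward.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical agent signature: context in, new findings out, no retained state.
Agent = Callable[[dict], dict]

def task_agent(params: dict) -> dict:
    # Stand-in for retrieval: reads the requested keys from an in-memory source.
    return {"series": [params["source"][k] for k in params["keys"]]}

def computation_agent(params: dict) -> dict:
    # Period-over-period growth rates for the retrieved series.
    s = params["series"]
    return {"growth": [(b - a) / a for a, b in zip(s, s[1:])]}

def analysis_agent(params: dict) -> dict:
    # Simple pattern check over the computed growth rates.
    rising = all(g > 0 for g in params["growth"])
    return {"trend": "rising" if rising else "mixed"}

REGISTRY: dict[str, Agent] = {
    "task": task_agent,
    "computation": computation_agent,
    "analysis": analysis_agent,
}

def run_plan(plan: list[tuple[str, dict]], initial: dict) -> dict:
    """Run one short-lived agent per step and merge its output into the context."""
    context = dict(initial)
    for role, params in plan:
        agent = REGISTRY[role]  # look up the stateless agent for this role
        context.update(agent({**context, **params}))
    return context

source = {"2022": 100.0, "2023": 120.0, "2024": 150.0}  # illustrative figures
out = run_plan(
    [("task", {"keys": ["2022", "2023", "2024"]}), ("computation", {}), ("analysis", {})],
    {"source": source},
)
print(out["growth"], out["trend"])  # [0.2, 0.25] rising
```

In this sketch, adding a step to the plan corresponds to "spawn a new agent," and changing the `keys` parameter corresponds to "reconfigure the task parameters"; neither requires modifying any agent.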

Why Investors Should Pay Attention

This isn’t theory; it’s infrastructure. The ability to coordinate and observe AI reasoning in real time is essential for any mission-critical system. It ensures that AI can operate across complex domains while preserving context, verifying accuracy, and producing insights you can actually trust.

For any investor evaluating the technical moat behind Agentic AI, this is the anatomy of scalable intelligence. It’s what makes Charli not just an AI company but a platform for the future of intelligent decision automation.